In 2024, the AI landscape is undergoing a transformative shift with the emergence of multimodal models. Unlike traditional AI models that specialize in a single data type, multimodal models seamlessly integrate various data forms—text, images, audio, and video—to create more sophisticated and versatile AI applications.

Earlier models such as OpenAI's DALL-E showed what is possible when text prompts are turned into images. Models like Google's Gemini go further: they are trained jointly on multiple data types from the start, rather than bolting separate single-modality models together.

The implications are broad. In healthcare, for example, a model that combines a clinician's spoken notes, written records, and medical images could help improve diagnostic accuracy. As multimodal models become more common, experts expect them to enable applications that single-modality models cannot support.

Large language models such as OpenAI's GPT-4 and Meta's Llama 2 have already set new standards for AI capabilities. Multimodal AI extends this to content that mixes text, audio, images, and video, both as input and as output.

Open models such as the Large Language and Vision Assistant (LLaVA), which connects a vision encoder to a language model, open up exciting possibilities and signal a new era of AI innovation.

Embrace the Rise of Small, Powerful Language Models (SLMs)

In 2024, the spotlight is turning to Small Language Models (SLMs). While current-generation large language models (LLMs) have tens or even hundreds of billions of parameters, SLMs are emerging as a more efficient and manageable alternative. Notable examples include Microsoft's Phi-2 and Mistral AI's Mistral 7B.

What Are Small Language Models?

There is no single agreed definition of an SLM. The term is usually applied to models with a few billion parameters or fewer, a stark contrast to their massive counterparts, which can exceed 100 billion parameters. Phi-2, for instance, has 2.7 billion parameters and Mistral 7B about 7 billion, while some research models have as few as about 1 million parameters.

Advantages of SLMs

SLMs provide several compelling benefits:

- Efficiency: Faster inference speeds and reduced memory requirements.

- Cost-Effectiveness: Lower computational requirements translate into significant cost savings. Training GPT-3 is estimated to have cost millions of dollars in compute alone, whereas SLMs can be trained and deployed at a fraction of that cost.

- Customizability: Ideal for specialized domains like finance and entertainment, SLMs can classify transactions, analyze sentiment, and systematize unstructured data in finance, or assist in scriptwriting and enhance interactive gaming experiences in entertainment.
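The memory advantage listed above comes down to simple arithmetic: the space needed to hold a model's weights is roughly its parameter count multiplied by the number of bytes stored per parameter. The Python sketch below illustrates this under that simplifying assumption. It ignores activations, caches, and runtime overhead, so real memory use is higher, and the parameter counts (Phi-2 at 2.7 billion, Mistral 7B rounded to 7 billion, GPT-3 at its published 175 billion) are nominal values chosen for illustration.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Simplifying assumption: memory ~= parameter count x bytes per parameter.
# Activations, KV caches, and framework overhead are ignored.

BYTES_PER_PARAM = {
    "fp32": 4.0,   # full precision
    "fp16": 2.0,   # half precision, common for GPU inference
    "int8": 1.0,   # 8-bit quantization
    "int4": 0.5,   # 4-bit quantization
}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Nominal parameter counts, rounded for illustration.
models = {
    "Phi-2": 2.7e9,
    "Mistral 7B": 7e9,
    "GPT-3": 175e9,
}

for name, params in models.items():
    row = ", ".join(
        f"{p}: {weight_memory_gb(params, p):.1f} GB" for p in ("fp16", "int4")
    )
    print(f"{name:<12} {row}")
```

At 16-bit precision, a 7-billion-parameter model needs about 14 GB just for its weights, versus about 350 GB for a 175-billion-parameter model. Quantized to 4 bits, the smaller model drops to roughly 3.5 GB, which is why SLMs can potentially run on a single consumer GPU or even a laptop.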

Democratizing AI with SLMs

Platforms like Hugging Face are democratizing the development of custom SLMs, enabling businesses to tailor models to their specific needs. The flexibility and efficiency of SLMs allow for rapid iteration and adaptation to niche requirements.

Explore how Small Language Models can revolutionize your business operations with their unparalleled efficiency, cost-effectiveness, and adaptability.

The Rise of Autonomous AI Agents

Autonomous AI agents are set to be a major trend in 2024. These software programs are designed to achieve specific goals with minimal human intervention, a significant advance over traditional prompt-based AI systems. Instead of waiting for a new prompt at every step, they can plan and carry out multi-step tasks on their own.

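These agents learn from data, adapt to new environments, and make independent decisions. OpenAI's custom GPTs, which can be configured with their own instructions, knowledge files, and actions that call external APIs, are an early step in this direction, showing how an assistant can complete tasks without constant human oversight.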

Frameworks such as LangChain and LlamaIndex, which help developers connect large language models (LLMs) to tools and data sources, have been crucial in building these agents. In 2024, we anticipate new frameworks that leverage multimodal AI, further enhancing the capabilities of autonomous agents.

Autonomous agents are poised to revolutionize customer experiences across industries such as travel, hospitality, retail, and education. By reducing the need for human intervention, they promise significant cost savings and improved efficiency. Their ability to provide contextualized responses and actions makes them particularly valuable in these settings.

Looking forward, we expect a new generation of autonomous agents that can interpret higher-level intentions and execute a series of sub-actions for the user. This evolution will lead to AI systems with a deeper understanding of user intent, greatly enhancing their utility and effectiveness.
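An agent turning a higher-level intention into a series of sub-actions can be sketched as a simple plan-then-execute loop. In this minimal Python example everything is hypothetical: the tool functions `search_flights`, `book_hotel`, and `send_summary` are stubs that only return strings, and `plan` is a hard-coded stand-in for the language model that a real agent, for instance one built with a framework such as LangChain or LlamaIndex, would use to decompose the goal.

```python
from dataclasses import dataclass, field
from typing import Callable


# Hypothetical tools. In a real system these would wrap external APIs
# (flight search, hotel booking, email); here they just return strings.
def search_flights(destination: str) -> str:
    return f"found 3 flights to {destination}"


def book_hotel(destination: str) -> str:
    return f"booked hotel in {destination}"


def send_summary(destination: str) -> str:
    return f"sent itinerary for {destination} to user"


TOOLS: dict[str, Callable[[str], str]] = {
    "search_flights": search_flights,
    "book_hotel": book_hotel,
    "send_summary": send_summary,
}


def plan(goal: str) -> list[str]:
    """Hypothetical planner: maps a high-level goal to an ordered list of
    tool names. A real agent would ask an LLM to produce this plan."""
    if "trip" in goal.lower():
        return ["search_flights", "book_hotel", "send_summary"]
    return []  # unknown goal: no sub-actions


@dataclass
class Agent:
    log: list[str] = field(default_factory=list)

    def run(self, goal: str, destination: str) -> list[str]:
        # Execute each planned sub-action in order and record its result.
        for step in plan(goal):
            result = TOOLS[step](destination)
            self.log.append(f"{step}: {result}")
        return self.log


agent = Agent()
for line in agent.run("Plan a trip to Lisbon", "Lisbon"):
    print(line)
```

The design keeps planning and execution separate, so the hard-coded planner could be swapped for a model without touching the tools. A production agent would also check each result, re-plan when a step fails, and ask the user to confirm irreversible actions such as payments.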

Open-Source AI Models to Rival Proprietary Counterparts

In 2024, open-source AI models are poised for significant advancements, potentially challenging proprietary models in performance and applications. The comparison between open-source and proprietary AI models is complex, depending on factors like specific use cases, development resources, and training data quality.

In 2023, open-source models such as Meta’s Llama 2 70B, Falcon 180B, and Mistral AI’s Mixtral-8x7B gained significant attention. Their performance, often comparable to proprietary models like GPT-3.5, Claude 2, and Jurassic-2, highlighted a notable shift in the AI landscape. This progress indicates a narrowing gap between open-source and proprietary models in terms of capabilities and practical applications.

The trend suggests that this gap will continue to close. Open-source models are becoming increasingly attractive to enterprises, particularly those seeking hybrid or on-premises solutions. Their flexibility and accessibility provide a compelling alternative to more restrictive proprietary models.

As we advance through 2024, we expect new releases from Meta, Mistral, and other emerging AI leaders to further bridge this gap. The availability of advanced open-source models as APIs will drive their adoption and integration into various business processes and applications, promoting a more competitive and innovative AI ecosystem.

Shift from Cloud to On-Device AI

In 2024, the generative AI landscape is moving from cloud-centric solutions to on-device implementations. This transition is primarily driven by the need to enhance privacy, reduce latency, and lower costs. Personal devices such as smartphones, PCs, vehicles, and IoT gadgets are becoming pivotal for multimodal generative AI models.

This evolution improves privacy and personalization and broadens access to AI capabilities for both consumers and businesses. Running generative AI models locally, either entirely on the device or in a hybrid setup that sends only the heaviest tasks to the cloud, keeps more personal data on the device and reduces cloud costs for developers.

Smartphones, in particular, will leverage multimodal generative AI models integrated with on-device sensor data. These advanced AI assistants, capable of processing and generating text, voice, images, and even videos on the device, promise more natural and engaging user experiences.

AI PCs “Super Cycle”: A New Era of AI-Integrated Computing

2024 is set to be a groundbreaking year for the PC market, fueled by a "super cycle" of laptop and PC replacements driven by advances in AI. The surge is propelled by Microsoft's push for AI-ready PCs and AI assistants such as Copilot, marking a significant shift towards AI-integrated computing.

Major players like AMD and Intel have announced AI-enabled processors, including AMD's Ryzen 8040 series and Intel's Core Ultra processors, which feature integrated neural processing units (NPUs) for efficient machine learning workloads. These chips accelerate AI tasks power-efficiently by distributing work across the CPU, GPU, and NPU. Qualcomm's Snapdragon X Elite processor, capable of running generative AI models on-device, further accelerates this shift. Additionally, Microsoft's upcoming Windows version optimized for local AI processing will bolster this transformation.

Analysts predict that AI PCs will not only rejuvenate PC sales but also increase the average selling price of PCs. With a global installed base of 1.5 billion PCs, the market potential is immense. AI PCs are expected to deliver new experiences, such as personalized assistance and the ability to run generative AI applications locally with smaller models.

For enterprises, this means the capability to run GenAI models with proprietary data locally, enhancing security. For consumers, it translates to more interactive experiences like real-time language translation and more lifelike non-playable characters in video games.

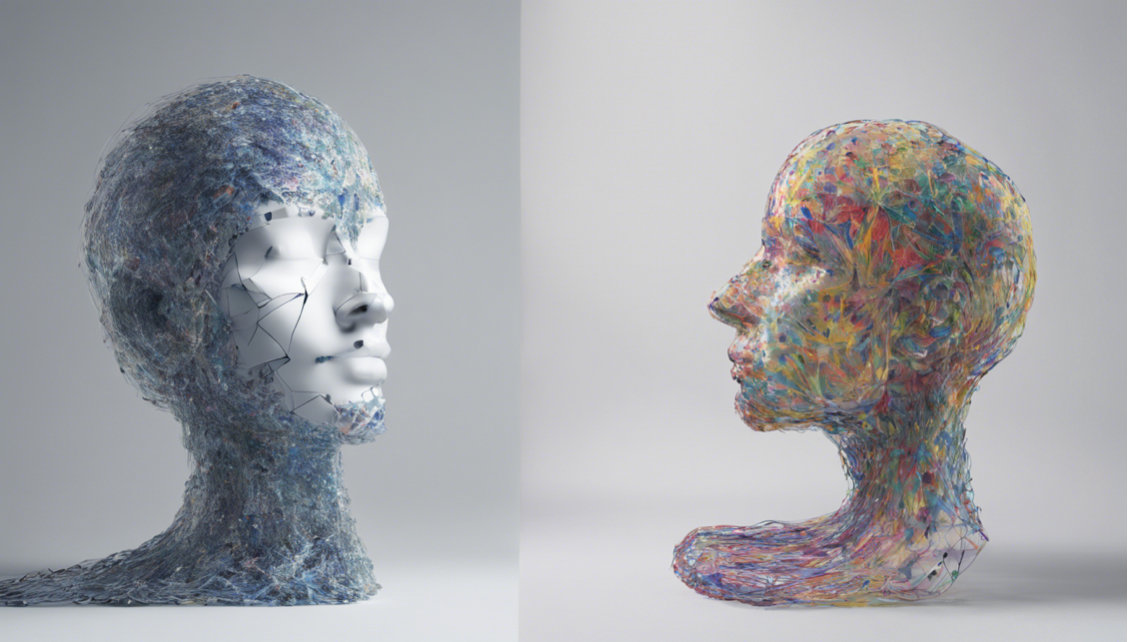

Generative AI Revolutionizing Art and Design

In 2024, generative AI will significantly enhance productivity, reduce time-to-market, and improve efficiency for creative professionals. This technology augments human creativity rather than replacing it, allowing teams to focus more on strategy and creative collaboration by automating repetitive tasks.

With advanced tools for image, audio, and video generation, creatives and marketers can tailor content to audience preferences more effectively, changing how advertising campaigns are built. Generative AI is also being adopted rapidly in the design of physical products and services. Generative design tools such as those offered by Autodesk let designers specify goals, constraints, and materials and receive multiple candidate designs, making it possible to generate prototypes quickly and test them in parallel as digital twins. This can lead to more robust, effective, and sustainable product designs.

By automating the initial stages of the design process, generative AI enables designers to explore a wider range of possibilities and focus on refining the best options, driving innovation and efficiency in creative industries.

The Rise of Mixed, Virtual, and Extended Reality Experiences

2024 is poised to be a pivotal year for Mixed Reality (MR), Virtual Reality (VR), and Extended Reality (XR) as these technologies move from niche applications to mainstream adoption. This shift is driven by rapid advances in spatial computing, smaller and lighter devices, and affordable hardware such as Meta's Quest 3 headset and the Ray-Ban Meta smart glasses.

Generative AI tools are set to significantly enhance and scale XR experiences, making the creation of three-dimensional (3D) content more accessible. This democratization of technology means that more creators and developers can build immersive virtual worlds, regardless of their technical skills or resources. Voice interfaces powered by generative AI will provide a more natural and intuitive mode of interaction within XR environments. Additionally, personal assistants and lifelike 3D avatars, enabled by generative AI, will become commonplace in XR spaces.

The Age of Bring Your Own AI (BYOAI)

Initially, major companies like Samsung, JPMorgan Chase, Apple, and Microsoft were cautious about allowing employee access to AI tools like ChatGPT due to concerns about data privacy and security. However, 2024 is likely to see a shift towards a more open attitude regarding generative AI in the workplace. The realization that many employees are already experimenting with AI in their professional and personal lives is driving this change.

A key emerging trend is “Bring Your Own AI” (BYOAI), where employees utilize mainstream or experimental AI services, such as ChatGPT or DALL-E, to enhance productivity in business tasks. Companies are increasingly recognizing the need to invest in AI and promote its safe use among employees. For instance, BP, the British multinational oil and gas giant, is actively integrating generative and classical AI technologies into its culture with an ethos of “AI for everyone.” This includes using AI to improve software engineering processes, enabling a single engineer to review code for a larger number of colleagues.

The goal is to empower employees and citizen developers, regardless of their technical expertise, to build, publish, share, and reuse their own AI tools. The lower barriers to entry with technologies like large language models (LLMs) and generative AI are making this level of democratization more achievable than ever.

Conclusion: Generative AI’s Groundbreaking Evolution

The advancements in Generative AI dazzled us in 2023, but 2024 is set to completely rewrite our reality. This year, generative AI isn’t just a fleeting trend; it’s a fundamental evolution transforming our work and lives. Picture it as AI’s leap from scribbling notes to painting masterpieces.

At O2 Technologies, we believe 2024 is the year when powerful mini-models will fit in our pockets and AI colleagues will become integral parts of our offices. This transformative shift is where we’ll look back and say, “That changed everything.”

Stay ahead with O2 Technologies and embrace the future of generative AI.